

Interactive Visualization of Beethoven's Piano Sonata No. 14


Moonlight is an interactive installation that visualizes the first movement of Beethoven's Piano Sonata No. 14. It was originally installed at the Texas Fine Arts Association gallery in downtown Austin, Texas, as part of the 'Digital Face of Interactive Art' exhibition organized by the Austin Museum of Digital Art.


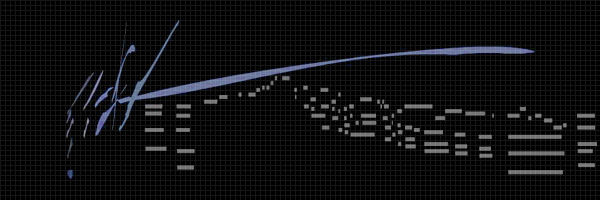

Pseudo Mechanical Universe. Moonlight abandons many traditional elements of musical notation.


This digital piece is assembled through a number of computational steps. First, a Musical Instrument Digital Interface (MIDI) file of the score is created when a human performs the piece on a MIDI-enabled piano. For each note played, a series of properties describes the event in detail: the time the note was struck, the velocity of the strike, the length of the sustain, and the pitch of the key.

Figure b. The first movement of the Moonlight Sonata rendered as a Soyez Rocket, sectioned into four measures.

The MIDI file is then converted into two parts: first, a digital audio re-recording of the performance, compressed as MP3; and second, a representation of the note information encoded in XML. The two are recombined, with user preferences applied, into a real-time graphic presentation generated by the music's structure. Interacting with the piece lets users modify the geometry, color, and behavior of the notes. In response, the projected environment, through which the participant physically navigates, changes and evolves.

- Media Visualization: Flash 5
- Audio: MP3
- MIDI to XML Conversion: C++
- Song Information: MIDI v2

Artisanship

- Jared Tarbell: design and code
- Lola Brine: installation
- Corey Barton: audio production
- Wesley Venable: piano performance
- Ludwig van Beethoven: original composition
- Stephen Malinowski: inspiration, MAMe

Additional Links

- Screenshots from the visualization engine.
- Early development images.
- Photographs from the gallery.
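The note-capture step described above, where each played note is reduced to a strike time, velocity, sustain length, and pitch, can be illustrated with a short sketch. This Python is not the original C++ converter. It assumes the MIDI file has already been decoded into chronological `(tick, kind, pitch, velocity)` tuples with one constant tempo, and the names `Note` and `pair_events` are hypothetical. It pairs each note-on with the next event on the same key to recover the four properties.

```python
from dataclasses import dataclass


@dataclass
class Note:
    start: float     # seconds from the beginning when the key was struck
    velocity: int    # strike velocity, 1-127
    duration: float  # seconds the note sustained
    pitch: int       # MIDI key number (60 = middle C)


def pair_events(events, ticks_per_beat=480, tempo_us=1_000_000):
    """Turn chronological (tick, kind, pitch, velocity) events into Note records.

    kind is "on" or "off"; a note-on with velocity 0 is treated as a note-off.
    Assumes one constant tempo of tempo_us microseconds per quarter note.
    """
    sec_per_tick = tempo_us / 1_000_000 / ticks_per_beat
    sounding = {}  # pitch -> (start_tick, velocity) for keys currently held
    notes = []
    for tick, kind, pitch, velocity in events:
        # Any event on a key that is already sounding ends that note.
        if pitch in sounding:
            start_tick, start_velocity = sounding.pop(pitch)
            notes.append(Note(start=start_tick * sec_per_tick,
                              velocity=start_velocity,
                              duration=(tick - start_tick) * sec_per_tick,
                              pitch=pitch))
        # A real note-on (velocity > 0) starts a new note on that key.
        if kind == "on" and velocity > 0:
            sounding[pitch] = (tick, velocity)
    # Notes never released are dropped; order the rest by strike time.
    notes.sort(key=lambda n: n.start)
    return notes


# Two short notes at 60 BPM with 480 ticks per beat (values are illustrative).
example = [(0, "on", 56, 40), (160, "off", 56, 0),
           (160, "on", 61, 48), (320, "on", 61, 0)]
for note in pair_events(example):
    print(note)
```

Standard MIDI files commonly send a note-on with velocity 0 in place of a note-off, so the sketch treats both the same way. Notes still sounding when the event list ends are dropped rather than guessed at.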

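The XML encoding of the note information might look like the following minimal sketch. The original schema is not given, so the element and attribute names (`song`, `note`, `time`, `velocity`, `length`, `pitch`) and the function `notes_to_xml` are hypothetical. The sketch only shows how each note's four recorded properties could become attributes on one XML element, using Python's standard `xml.etree.ElementTree`.

```python
import xml.etree.ElementTree as ET


def notes_to_xml(notes):
    """Encode (start, velocity, duration, pitch) tuples as an XML string.

    Element and attribute names are hypothetical placeholders, not the
    original Moonlight schema. Times are seconds, rounded to milliseconds.
    """
    song = ET.Element("song")
    for start, velocity, duration, pitch in notes:
        # One <note> element per played note, properties stored as attributes.
        ET.SubElement(song, "note", {
            "time": f"{start:.3f}",
            "velocity": str(velocity),
            "length": f"{duration:.3f}",
            "pitch": str(pitch),
        })
    return ET.tostring(song, encoding="unicode")


print(notes_to_xml([(0.0, 40, 0.333, 56), (0.333, 48, 0.333, 61)]))
```

Because the times are stored in seconds, a player could compare them against the MP3 playback position to decide which notes to draw at any moment; this is one possible way to recombine the two parts, not a description of the original engine.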